The Seven Most Common Computing Efficiency Functions#

Constant \(\theta (1)\)#

Programs that have constant complexity are easy to identify because they simply do a fixed number of operations. Constant time complexity is the best possible time complexity we could achieve. Here is a simple example, where the cost is always one multiplication and one addition no matter what the input variable x is:

public static int fn1(int x){

return x * x + 2;

}

We say that the run-time complexity of fn1 is in \(\theta (1)\). Even though it may seem equally valid to say that the complexity is in \(\theta (2)\) or \(\theta (3)\), we write it consistently without the presence of any constants (other than \(1\)).

Sometimes we assume that as soon as a loop is present, the complexity jumps into \(θ(n)\) but this is not necessarily the case. Check out this code:

public static int fn2(int n){

for (int i = 0; i < 100; i++){

n = n + i;

}

return n;

}

The run-time complexity of this method fn2 is still constant, no matter what value of \(n\), because the loop body will always execute 100 times.

Logarithmic \(\theta (\log n)\)#

We saw the logarithmic time complexity in the example of binary search. Here is an example where fn3 has logarithmic run-time complexity as well:

public static int fn3(int n){

for (int i = 1; i < n; i = i * 3){

n = n + i;

}

return n;

}

To see this complexity, make a pattern for various values of \(n\) and check how many times the loop body executes.

\(n\) |

Time Loop Body Executes |

|---|---|

\(27\) |

\(3\) (i = 1, 3, 9, 27) |

\(28\) |

\(4\) (i = 1, 3, 9, 27, 81) |

\(29-81\) |

\(4\) (i = 1, 3, 9, 27, 81) |

\(82\) |

\(5\) (i = 1, 3, 9, 27, 81, 243) |

\(83-243\) |

\(5\) (i = 1, 3, 9, 27, 81, 243) |

\(244\) |

\(6\) (i = 1, 3, 9, 27, 81, 243, 729) |

\(245-729\) |

\(6\) (i = 1, 3, 9, 27, 81, 243, 729) |

\(730\) |

\(7\) (i = 1, 3, 9, 27, 81, 243, 2187) |

We see the effect of the logarithm when we look at this table, as the range during which the same complexity applies gets larger and larger as we look down the table.

Linear \(\theta (n)\)#

We saw this time complexity in the example of sequential search. Here is another example where fn4 also has linear run-time complexity:

public static int fn4(int n){

for (int i = 1; i < n / 2; i++){

n = n + i;

}

return n;

}

The loop body will always execute \(⌊n / 2⌋\) times. Since we drop constants, this means the complexity is in \(\theta (n)\).

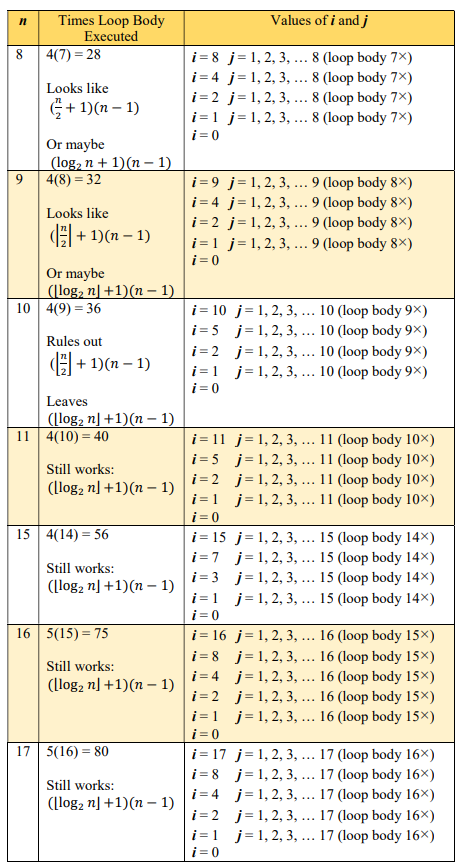

Linear Logarithmic \(\theta (n \log n)\)#

The usual quicker sorting algorithms fall in this category, of which we will see an example in a future chapter. Here is an example of a Java method whose run-time is in \(θ(n \log n)\):

public static int fn5(int n){

for (int i = n; i > 0; i = i / 2){

for (int j = 1; j < n; j++){

n = n + i;

}

}

return n;

}

A table can be used to visualize what is happening to help us build the model for the complexity.

Quadratic \(\theta (n^2)\)#

Adding two square matrices runs in quadratic time, as per this code:

public static int[][] addSquareMatrices(int[][] matrix1, int[][] matrix2){

int n = matrix1.length;

int[][] answer = new int[n][n];

for (int row = 0; row < n; row++){

for (int col = 0; col < n; col++){

answer[row][col] = matrix1[row][col] + matrix2[row][col];

}

}

return answer;

}

Bubble Sort#

Some of the first sorting algorithms you would have learned (bubble sort, insertion sort, and selection sort) run in quadratic time. Here is the bubble sort algorithm in Java:

public static void bubbleSort(int[] aList){

int temp;

for (int i = 0; i < aList.length - 1; i++){

for (int j = 1; j < aList.length - i; j++){

if (aList[j - 1] > aList[j]){ //swap them

temp = aList[j - 1];

aList[j - 1] = aList[j];

aList[j] = temp;

}

}

}

}

Cubic \(\theta (n^3)\)#

The multiplication of two square matrices has a run-time that is cubic. Here is the Java code:

public static int[][] multSquareMatrices(int[][] matrix1, int[][] matrix2){

int n = matrix1.length;

int[][] answer = new int[n][n];

int oneSum;

for (int row = 0; row < n; row++){

for (int col = 0; col < n; col++){

oneSum = 0;

for (int place = 0; place < n; place++){

oneSum = oneSum + matrix1[row][place] * matrix2[place][col];

}

answer[row][col] = oneSum;

}

}

return answer;

}

See the triple loop, with each loop executing for \(n\) times.

Exponential \(\theta (2^n)\)#

Using simple loops, it is a bit difficult to concoct an exponential time complexity. However, exponential time complexities arise easily in recursion, a topic to be covered in later chapters. One example to consider is the generation of binary numbers with a given number of digits, \(n\). Since we know there are \(2^n\) such numbers, we can make Java code with exponential run-time. The code is rather long, but here it is:

/**

* Print the 1d array, with no spaces but newline at end.

* @param anArray - 1d array of integers

*/

public static void printArray(int[] anArray){

for (int i = 0; i < anArray.length; i++){

System.out.print(anArray[i]);

}

System.out.println();

}

/**

* Adds 1 to the binary number stored in binArray, one bit per cell.

* @param binArray - 1d array of 0's and 1's making a binary number

* @return = true if there is a carry out of right; false otherwise

*/

public static boolean add1(int[] binArray){

int carry = 1;

for (int i = binArray.length - 1; i >= 0; i--){

binArray[i] = binArray[i] + carry;

if (binArray[i] > 1){

carry = 1;

binArray[i] = binArray[i] & 1; //keep only last digit

}

else{

carry = 0;

}

}

return (carry > 0);

}

/**

* Print the binary numbers from 0 to the maximum that fits in

* the given number of bits.

* @param numBits - the number of bits in each number

*/

public static void countBinary(int numBits){

int[] binNum = new int[numBits]; //inializes to 0's

boolean carryOut = false;

while (! carryOut){

printArray(binNum);

carryOut = add1(binNum);

}

}

Similar code could be used for systematically trying to guess someone’s password. Each extra letter we put into our passwords increases the time required to generate all combinations by 94 times (I just counted 47 keys on my keyboard, not including invisible characters, and each produces 2 characters). Thus a password of length 4 requires time in the order of

but doubling the length of the password would require time in the order of

This is exponential growth.

Practice Questions#

Counting assignments to

x, and given the following Java code forfn:public static void fn(int n){ int x = 10; for (int i = 0; i < n; i++) { for (int j = n; j > 0; j = j - 2) { x = x + i + j; } } }

a. What is the exact run-time complexity of

fnfor general \(n > 10\)?

b. What is the run-time complexity using order notation?Counting assignments to

answer, and given the following Java code forfn:public static void fn(int n){ int answer = 0; int i = n; while (i > 0) { answer = answer + n * i; i = i / 5; } }

a. What is the exact run-time complexity of

fnfor general \(n > 10\)?

b. What is the run-time complexity using order notation?Counting assignments to

answer, and given the following Java code forfn:public static void fn(int n){ int answer = 0; int i = n; while (i > 0) { answer = answer + n * i; i = 5 / i; //difference from above } }

a. What is the exact run-time complexity of

fnfor general \(n > 10\)?

b. What is the run-time complexity using order notation?With pencil-and-paper, not with a machine, run the

bubbleSortalgorithm on this list, drawing the new list for each iteration of the outer loop:[89, 12, 56, 24, 69]

With pencil-and-paper, not with a machine, run the

bubbleSortalgorithm on this list, drawing the new list for each iteration of the outer loop:[10, 18, 21]

Find the exact number of key comparisons in the

bubbleSortalgorithm given foraListof size 10 andaListof size \(n\).Write Java method

hasDuplicatewhich takes an integer array and returns true only if there is a duplicate in the array. What is the worst-case run-time complexity of your algorithm and when does this occur?Write the Java code for selection sort on a list of integers, so that the list ends up sorted in ascending order. Selection sort moves through the list looking for the smallest item. It swaps that item into the first position and repeats looking for the smallest item on the remainder of the unsorted list and swapping into the first position of the unsorted list, and so on until the entire list is sorted. Here is an algorithm:

Algorithm selectionSort (\(list\), \(len\))

Assumes: \(list\) is indexed starting at 0

\(\;\;\;\;\;\)\(\;\;\;\;\;\)\(\;\;\;\) \(len\) is the length of \(list\)

Start \(counter\) at 0

As long as \(counter < len - 1\) do following:

\(\;\;\;\;\;\) a. \(min ← list[counter]\)

\(\;\;\;\;\;\) b. \(minIndex ← counter\)

\(\;\;\;\;\;\) c. Start \(otherCount\) at \(counter + 1\)

\(\;\;\;\;\;\) d. As long as \(otherCount < len\) do following:

\(\;\;\;\;\;\)\(\;\;\;\;\;\) i. If \(list[otherCount] < min\) then item is new smallest so

\(\;\;\;\;\;\)\(\;\;\;\;\;\)\(\;\;\;\;\;\) • \(min ← list[otherCount]\)

\(\;\;\;\;\;\)\(\;\;\;\;\;\)\(\;\;\;\;\;\) • \(minIndex ← otherCount\)

\(\;\;\;\;\;\)\(\;\;\;\;\;\) ii. Increment \(otherCount\)

\(\;\;\;\;\;\) e. Exchange:

\(\;\;\;\;\;\)\(\;\;\;\;\;\) i. \(list[minIndex] ← list[counter]\)

\(\;\;\;\;\;\)\(\;\;\;\;\;\) ii. \(list[counter] ← min\)

\(\;\;\;\;\;\) f. Increment \(counter\)

Show how selection sort work on this list, by writing the list after each pass:

{98, 12, 32, 3, 7, 19, 54, 87}

In what complexity class is selection sort’s run-time?

Write the Java code for insertion sort on a list of integers, so that the list ends up sorted in ascending order. Insertion sort assumes that the first item in the list is sorted. It goes to the second item and checks towards the left to see where it should be placed moving any item that is larger over by one to make room. This pattern continues until the list is sorted. Here is an algorithm:

Algorithm insertionSort (\(list\), \(len\))

Assumes: \(list\) is indexed starting at 0

\(\;\;\;\;\;\)\(\;\;\;\;\;\)\(\;\;\;\) \(len\) is the length of \(list\)

Start \(counter\) at 1. It will represent the location of the item to place, and we assume position 0 has a correct item (a list of one element is sorted).

As long as we don’t go off the right end of our list, or even get to the last element, i.e. \(counter < len - 1\), do the following:

\(\;\;\;\;\;\) a. \(newElement ← list[counter]\)

\(\;\;\;\;\;\) b. Move left by setting \(location ← counter – 1\)

\(\;\;\;\;\;\) c. Shuffle items right as long as there are more items to the left

\(\;\;\;\;\;\)\(\;\;\;\) in the list and the item is bigger than the item we are placing,

\(\;\;\;\;\;\)\(\;\;\;\) i.e. \(location >= 0\) and \(list[location] > newElement\),

\(\;\;\;\;\;\)\(\;\;\;\) do following:

\(\;\;\;\;\;\)\(\;\;\;\;\;\) i. \(list[location + 1] ← list [location]\)

\(\;\;\;\;\;\)\(\;\;\;\;\;\) ii. Decrement \(location\)

\(\;\;\;\;\;\) d. Place the current item with \(list[location + 1] ← newElement\)

\(\;\;\;\;\;\) e. Find next item to place by incrementing \(counter\)

Show how insertion sort work on this list, by writing the list after each pass:

{98, 12, 32, 3, 7, 19, 54, 87}

In what complexity class is insertion sort’s run-time?