1. What does the case of the Audi 5000 reveal about human error and design?

2. What are some taxonomies of error?

3. How are error and reliability defined?

4. How are errors analyzed? How may redundancy reduce errors?

5. How does human error relate to systems?

6. What human errors affected Air Transat flight 236?

Case Study: The Audi 5000

- sedan introduced 1978

- Nov. 23, 1986: 60 Minutes aired segment “Out of Control” (stories also on NBC’s The Today Show, and CBC’s MarketPlace)

- sudden __________ ____________ (SUA): spontaneous, uncontrolled acceleration of a vehicle when shifted from park to drive or reverse, often with apparent loss of braking

- the idle stabilizer (a fuel-system control component) was implicated; it supposedly triggered “_________ malfunctions” without warning

- 13 owners had complained of SUA before the broadcast; 1,400 complained in the following month

- over 100,000 vehicles were recalled

- by 1989, legal claims totalled $5 billion

Questions not asked:

• Why were there more of these incidents among drivers who had relatively little __________ driving the Audi 5000? (most incidents occurred within the first 2,000 miles of a car’s life)

• Why no reported problems with Audi 4000 Quattro? (had identical idle stabilizer mechanism)

• Why did this only happen in cars starting at ____?

• Why were many ___________ pedals bent, even snapped off?

Audi’s explanation:

• Audi engineers examined 270 incidents

• only 6 idle-speed stabilizers were found defective--but these defects would not cause rapid, unexpected acceleration

• 2.2-litre, 5 cylinder, 110 hp engine cannot ________ brakes (drivers claimed to be standing on brakes!)

• demonstrations made for NBC and CBS: full-throttle acceleration runs at 30 and 50 mph stopped by brakes

• precipitating cause: driver error (___________ pedal pressed instead of brake)

Pollard & Sussman (National Highway Traffic Safety Administration) (1989):

- explored electromagnetic and radio frequency interference and malfunctions in cruise control, electronic idle-speed control systems, computer-controlled fuel injection systems, transmissions, and brakes

- findings:

• no mechanism (besides actuating ___ _____) would open throttle enough to accelerate any of the cars at full power

• “minor” 2-second surges of ~0.3 g (~3 m/s²) caused by electronic faults in idle stabilizer systems

• surge could _______ driver enough to push accelerator instead of brake

• travel of pedals and height off the floor make it possible for engine torque to overtake brake torque when pedals applied

• unusually problematic _________ of pedals

- conclusions:

• the problem of SUA is complex, involving an interaction among the driver, external distractions, and the design of the car itself

• most incidents involved “pedal ______________”: driver placing foot on wrong pedal

• aggravated by pedal design defects in some vehicles

Pedal design:

- pedals were coplanar, for easy “heel/toe” operation; reaction time (RT) is faster (Casey & Rogers, 1987)

- redesigned in 1983 due to confusion

- pedal configuration related to (rare) pedal errors of 0.2% (Rogers & Wierwille, 1988)

- __________ misperceived to be right of centre of vehicle--but not related to pedal errors (Vernoy, 1989)

- drivers have familiarity with pedals aligned more to the right

- this is a ______-induced error (DIE): the design itself causes an error to occur (as opposed to operator-induced error, which is due to inadequate performance by the user)

Postscript:

- Audi’s sales dropped from 74,000 (1984) to 12,000 (1991)

- Audi did away with “5000” designation

- renamed sedan “100” in 1989 and “A6” in 1995

- a class action lawsuit filed in 1987 by about 7,500 owners remains _________

- SUA not a unique problem:

• >2,000 complaints about GM models built 1973-1986

• incidents reported by owners of Toyotas, Renaults, Mercedes-Benzes, Nissans, Saabs, & Volvos

• 1997: Chrysler admitted that Jeep Cherokee & Grand Cherokee had an unintended acceleration (UA) problem; introduced retrofit for brake-park shift interlock (brake pedal must be depressed to shift into gear)

• 2009-2011: Toyota recalled over 7 million vehicles, including Camry and Corolla; incidents were attributed mainly to driver error/pedal misapplication (Kane et al., 2010; NHTSA, 2011)

• 2016: Tesla Model X black box data showed accelerator pedal position abruptly increased to 100%

Human error: a failure to perform a task satisfactorily, where that failure cannot be attributed to factors beyond the human’s immediate control.

Ignoring human factors information leads to difficulty in performing the task and, ultimately, to human error.

• flight crew error was the primary factor contributing to 357 (77.8%) of the 459 large commercial aircraft incidents that occurred worldwide between 1959 and 1995 (Boeing, 1996)

• decreased to 56% between 1995 and 2004

• 52% of “root causes” identified in 180 _______ power plants are due to human error (Reason, 1990b)

• more than 38% of self-reported __________ accidents are due to human error (Järvinen & Karwowski, 1993)

• 88% of all accidents are caused primarily by an individual worker (Rasmussen, Duncan, & Leplat, 1987)

• more than 70% of anesthetic incidents in the operating room are related to the operator (Woods et al., 2010)

• between 30% and 80% of _________ accidents are due to human error (Senders & Moray, 1991)

- may be ________________ descriptions: concerned with what the error was

- may be _____________ descriptions: concerned with the information processing that leads to error (i.e., the underlying causes)

Goals:

• unintentional vs. ___________

e.g., error on a test vs. exceeding the speed limit

Outcomes:

• ___________: error in which undesirable consequences occurred

e.g., patient prescribed incorrect medicine, which causes an adverse event

• _________: error with possibility for undesirable consequences, but none actually occurred

e.g., patient prescribed incorrect medicine, but pharmacist catches the error

- a recovered error today could be an unrecovered error tomorrow, unless some changes are made

Human Error Categories (Swain & Guttman, 1983):

• error of __________: a person performs a task or step that should not have been performed

a.k.a. type I error/false positive/false alarm

e.g., hitting thumb with hammer

• error of ________: a person fails to perform a task or step

a.k.a. type II error/false negative/miss

e.g., forgetting to unplug coffeemaker

• sequential error: a person performs a task or step out of sequence

e.g., lighting a fire before opening fireplace flue damper

• ____ error: a person performs a task or step, but too early, too late, or at the wrong speed

e.g., going through intersection on a red light, or speeding

• __________ act: a person introduces some task or step that should not have been performed (action from an unrelated series)

e.g., lighting a fire, then unplugging coffeemaker

Origin of error (Meister, 1971):

• ______ error: designer does not take into account human abilities

e.g., Norman door handle designed to seem that it should be pulled, but you actually have to push

• assembly or _____________ error: system not built according to design

e.g., Takata airbag manufacturing defect caused injuries to drivers when the airbags deployed

• ____________/maintenance errors: system not installed or maintained correctly

e.g., faulty maintenance update to CrowdStrike security software took down 8.5 million computers

• _________ error: system not operated according to intended procedure

e.g., a metric-imperial unit conversion error caused the Mars Climate Orbiter to burn up

James Reason (1990b):

- distinguishes between errors of execution and errors of intention

- errors of execution:

• ____: unintentionally performing an incorrect action

e.g., stepping on a banana peel and falling down

▸ error of action execution

• mode error: performing the correct response, but while in the wrong mode of operation; a kind of slip

e.g., in paint software, attempting to draw something while using the eraser tool

▸ error of attention/memory

• _____: neglecting to perform a required action

e.g., forgetting to take your medicine twice a day

▸ error of memory

- errors of intention:

• _______: selecting an action, and carrying it out successfully--but it is the wrong action

e.g., smoking banana peels to try and get high

▸ error of planning (choosing wrong decision-making rule, or lacking background knowledge)

▸ may be due to memory/perception/cognition

• _________: intentionally contravening a standard (operating procedures, codes of practice, laws, etc.) with no intent to harm

e.g., reusing your passwords

▸ implies a (governing) social context

• malicious violation: has intent to harm

e.g., sabotage, terrorism

▸ is this necessarily an error?

Error measurement (Chapanis, 1951):

• ________ error: differs from trial to trial

• ________ error: always the same; easier to predict and correct

Complete analysis of error (Swain & Guttman, 1980):

1. Describe system _____ and functions

2. Describe situation

3. Describe tasks and ____

4. Analyze tasks for which errors are likely

5. Estimate ___________ of each error

6. Estimate probability that the error is not corrected

7. Devise means to increase ___________

8. Repeat steps 4 - 7 in light of changes

- different types of errors may need different types of actions to prevent them

Error: an action (or lack of action) that violates tolerance limit(s) of the system

• defined in terms of system requirements and capabilities

• doesn’t imply anything about humans; may be ______ flaw

Error Probability (EP), aka Human Error Probability (HEP):

EP = (# of errors) ÷ (total # of opportunities for error)

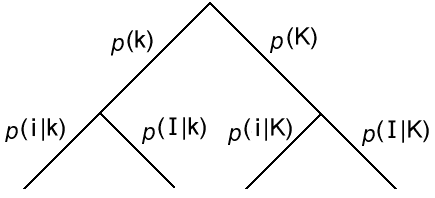

Calculation of HEP: THERP (Technique for Human Error Rate Prediction; Swain, 1963)

- perform an _____ ____ analysis

- start at top with probability of correct/incorrect action

- next level: probability of error, given last action

- these are ___________ probabilities (are not independent)

- sum of partial error probabilities at bottom is overall error probability

e.g., opening a hotel room door using a keycard

• lowercase letter = successful outcome

• UPPERCASE letter = failure

• conditional probability: p (b|a) means the probability of b given a

k = choose correct keycard

K = choose incorrect keycard

i = insert keycard correctly

I = insert keycard incorrectly

p (i|k) is probability of getting correct keycard into door lock correctly (only correct outcome)

p (_____) = 1 - p (i|k)
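
The keycard event tree can be sketched in a few lines of Python. This is only an illustration: the probability values are invented for the example (they do not come from THERP tables), and the function name `keycard_event_tree` is hypothetical. Each path probability is the product of the conditional probabilities along its branches, and the failure paths are summed to give the overall error probability.

```python
def keycard_event_tree(p_k, p_i_given_k):
    """Path probabilities for a two-step event tree.

    p_k         -- P(k): the correct keycard is chosen (illustrative value)
    p_i_given_k -- P(i|k): the card is inserted correctly, given the correct card
    """
    p_success = p_k * p_i_given_k            # path k -> i (the only successful path)
    p_fail_insert = p_k * (1 - p_i_given_k)  # correct card, inserted incorrectly
    p_fail_card = 1 - p_k                    # wrong card: fails however it is inserted
    return {
        "success": p_success,
        "fail_insert": p_fail_insert,
        "fail_card": p_fail_card,
        "overall_error": p_fail_insert + p_fail_card,
    }


# Assumed values, for illustration only
tree = keycard_event_tree(p_k=0.95, p_i_given_k=0.90)
for path, p in tree.items():
    print(f"{path}: {p:.3f}")
```

With these assumed values, the success path and the overall error probability sum to 1, which is a quick check that no branch of the tree was left out.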

Probabilities of specific actions can be found in tables:

e.g., HEPs (Swain & Guttman, 1980)

• select wrong control in group of labeled identical controls = .003

• failure to recognize incorrect status of item in front of operator = .01

• turn control wrong direction, under stress, when design violates population norm = .5
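
To get a feel for what these tabled values imply, the sketch below estimates the chance of at least one error over repeated opportunities. It assumes each opportunity is independent of the others, which is a simplification, and the count of 100 opportunities and the dictionary keys are made up for the example.

```python
# HEP values quoted above (Swain & Guttman, 1980); key names are hypothetical
HEP = {
    "select_wrong_control": 0.003,
    "miss_incorrect_status": 0.01,
    "turn_control_wrong_way_under_stress": 0.5,
}


def p_at_least_one_error(hep, n):
    """Chance of one or more errors in n opportunities, assuming independence."""
    return 1 - (1 - hep) ** n


# 100 opportunities is an arbitrary illustrative number
for name, hep in HEP.items():
    print(f"{name}: {p_at_least_one_error(hep, 100):.3f}")
```

Even a small per-action HEP accumulates quickly when an action is repeated many times.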

___________: probability of a successful outcome of the system or component

• is also defined in terms of system requirements

• thus, to evaluate a system we must know _____ and purposes of the system

R = (# of successful operations) ÷ (total # of operations)

(also, R = 1 - EP)
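
A minimal sketch of the two ratios, using hypothetical counts (3 errors in 200 opportunities) that are not taken from any study:

```python
def error_probability(errors, opportunities):
    """EP = (# of errors) / (total # of opportunities for error)."""
    if opportunities <= 0:
        raise ValueError("opportunities must be positive")
    return errors / opportunities


def reliability(errors, opportunities):
    """R = 1 - EP, i.e. successful operations / total operations."""
    return 1 - error_probability(errors, opportunities)


# Hypothetical observation: 3 errors in 200 opportunities
ep = error_probability(3, 200)
r = reliability(3, 200)
print(f"EP = {ep:.3f}, R = {r:.3f}")
```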

In general, reliability goes down as number of components goes up (i.e., as __________ increases).

Components in ______: if any component fails, the whole system fails

- vulnerable to a single point of failure (SPOF)

e.g., four tires on a car

Rs = R1 × R2 × ... × Rn

e.g., all components have Ri = .90:

n = 1: Rs = .90

n = 2: Rs = .9 × .9 = .81

n = 3: Rs = .9 × .9 × .9 = .73

n = 4: Rs = .9⁴ = .66
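
The series calculation above can be reproduced with a short Python sketch. The function name `series_reliability` is an illustrative choice, and the product rule assumes that components fail independently.

```python
from math import prod


def series_reliability(reliabilities):
    """Reliability of components in series: the product of the individual R_i."""
    return prod(reliabilities)


# Every component has R_i = .90, as in the example above
for n in range(1, 5):
    rs = series_reliability([0.90] * n)
    print(f"n = {n}: Rs = {rs:.2f}")
```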

______ __________: multiple independent components operate all the time, but only one is needed

e.g., Airbus A330 can fly on only one engine

e.g., traffic signals have multiple lights

e.g., RAID level 1: data is mirrored across two hard drives

- failure occurs only when all components fail

- has high fault tolerance

Rr = 1 - Π (1 - Ri)

e.g., compare two systems: one with a backup, the other with active redundancy; Ri = .90

• having backup (in series): Rs = .9 × .9 = .81

• redundant: Rr = 1 - (1 - .9)² = .99
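
The same comparison as a Python sketch, assuming independent failures. The function names are hypothetical.

```python
from math import prod


def series_reliability(reliabilities):
    """All components must work: product of the R_i."""
    return prod(reliabilities)


def redundant_reliability(reliabilities):
    """Only one component must work: 1 minus the product of failure probabilities."""
    return 1 - prod(1 - r for r in reliabilities)


pair = [0.90, 0.90]
print(f"series:    {series_reliability(pair):.2f}")
print(f"redundant: {redundant_reliability(pair):.2f}")
```

Adding a component lowers reliability when the components are in series but raises it when they are redundant.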

Improving reliability:

- hardware factors: KISS (Keep It ______, ______)

• availability rates (a.k.a. mission-capable rates) of military aircraft

F-111D Aardvark (early “glass cockpit,” 1967): ~33%

F-35 Lightning II (2016): ~50%

A-10 Thunderbolt II (“Warthog”) (1977): ~66%

- human factors:

• use human factors knowledge in ______

• use human as _________ system component (Human Reliability Analysis)

Pros & Cons:

__________ error incidence to aid system design

can give relative error (for different parts of a task)

not reliable for ________ error judgments (variance of 10×)

errors usually self-detected--reliability & validity?

assumes error is ___________ of other errors

Failure task analysis: analyze all anticipated failures/errors

• _________: make it impossible (!?) to commit errors

• prevention: minimize possibility of errors

• ____ ____: minimize effects of errors

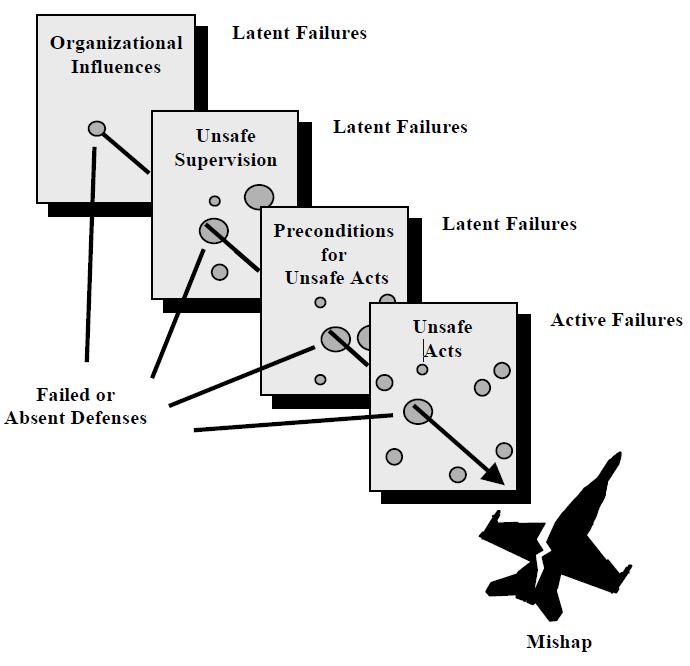

Human Factors Analysis and Classification System (HFACS)

(Shappell & Wiegmann, 2000; Wiegmann & Shappell, 2003)

- studies of accidents reveal that human error is rarely the only factor

- rather, accidents are caused by ________ factors

e.g., improper equipment design, environmental factors, poor supervision, or interactions among factors

- HFACS is a comprehensive framework for understanding error, originally developed for the U.S. Navy and Marine Corps as an accident investigation and data analysis tool

- allows human error to be seen in the context of a system

- based on James Reason’s (1990a, 1990b) “_____ ______” model of human error:

• slices represent multiple layers of defences against error in a system

• ______ failures: existing flaws in defences (holes); not immediately apparent; may lie dormant within a system (“an accident waiting to happen”)

• ______ failures: unsafe acts have immediate effect on system performance; tend to be associated with front-line operators (“the last person who touched it”)

Level 1: Unsafe ____

• errors

- perceptual, skill-based, or decision errors

e.g., black-hole approach illusion causes misjudgment of altitude at night

• violations

- routine or exceptional departures from training rules

e.g., flying under a bridge

Level 2: _____________ for Unsafe Acts

• situational factors

- physical environment, or tools/technology

e.g., poor weather conditions

• condition of operators

- adverse mental or physiological states, or physical/mental limitations

e.g., distraction

• personnel factors

- communication, coordination, & planning; or fitness for duty

e.g., poor communication among flight crew members

Level 3: ___________ Factors

• inadequate supervision

e.g., personality conflict

• planned inappropriate operations

e.g., improper crew pairing (very senior captain with very junior first officer)

• failure to correct known problem

• supervisory violation

e.g., permitting someone to operate an aircraft without current qualifications

Level 4: ______________ Influences

• resource management

e.g., management decisions about safety vs. on-time performance

• organizational climate

e.g., formal accountability for actions

• operational process

e.g., use of standard operating procedures

- Human Factors Intervention matriX (HFIX; Shappell & Wiegmann, 2006, 2007, 2009) used to design interventions for improving safety

• considers decision errors, skill-based errors, perceptual errors, and violations

• at the levels of operational/physical environment, task/mission, tools/technology, individual/team, and supervisor/organization

e.g., “How can I redesign a checklist to reduce violations?” (violations and tools/technology)

- pros & cons:

applied to accident investigation, understanding, & prevention in over 1,000 military aviation accidents

e.g., the primary causes of commercial aviation accidents are Preconditions for Unsafe Acts - Situational Factors - Physical Environment (58.0%), and Preconditions - Personnel Factors - Crew Resource Management (10.7%) (Shappell et al., 2007)

criticized for _______________ human actions as “correct” or “incorrect”

The Flight

- Airbus A330-243, flight from Toronto’s Pearson Airport to Lisbon, Portugal

- departed at 8:52 p.m. EDT on August 24, 2001

- pilots: Captain Robert Piché (16,800 hours of flying time) and First Officer Dirk DeJager (4,800 hours)

- cruising at Flight Level 390 (39,000 feet)

- 4 hours into the flight, pilots noticed fuel imbalance between left and right inner-wing tanks

- implemented fuel cross-feed without consulting _________ for fuel imbalance

- decided to ______ to Azores

- 28 minutes later, right engine flamed out; 13 minutes later, left engine flamed out

- used hydraulic/electrical power from ram air turbine to glide for 19 minutes over 65 nautical miles (120 km)

- made engines-out “____-_____” nighttime landing at Lajes, Azores, Portugal

- 8 (of 10) tires ruptured; 11 people injured (out of 306)